Introduction

Rasa is an open source conversational AI framework.

NLU in Rasa stands for Natural Language Understanding. Confused between NLP and NLU? NLP stands for Natural Language Processing, while NLU is short for Natural Language Understanding. Both share the goal of bridging human language (e.g. English) and the language of the computer. Rasa NLU is a natural language processing tool for intent classification, response retrieval, entity extraction and more. It is commonly used for sentiment detection, conversational chatbots, named-entity recognition and identifying the purpose of a sentence (its intent).

Objective

In this article, we are going to talk about common issues that come up while developing a bot with the Rasa framework. We will also discuss ways to overcome them using functionality provided by Rasa, along with a few other tricks that apply across all bot development frameworks.

Common Issues

Generating NLU Data

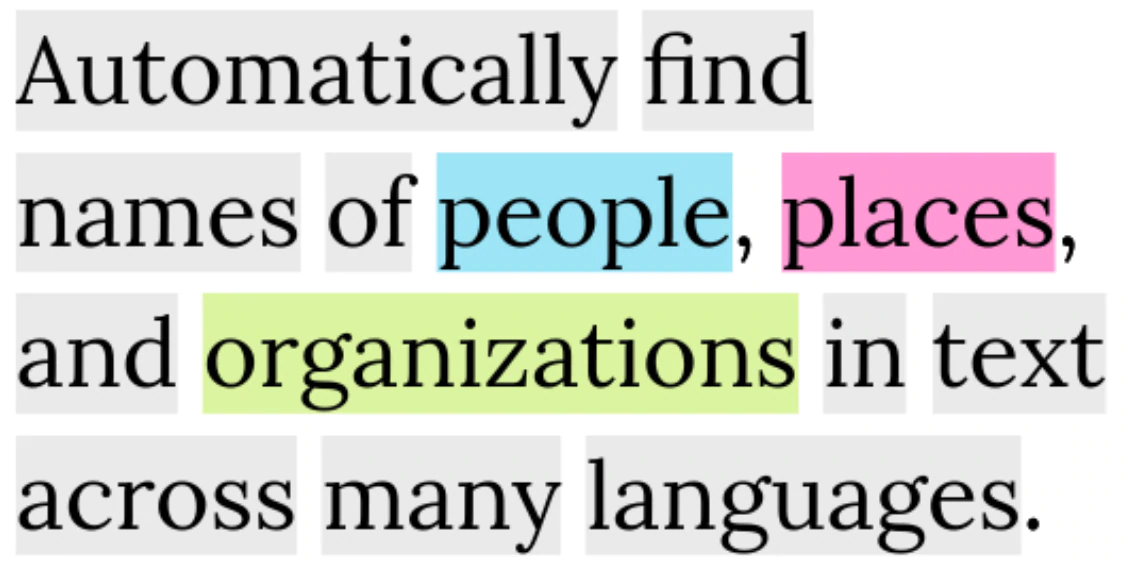

NLU (Natural Language Understanding) is the part of the Rasa framework that performs intent classification, entity extraction, and response retrieval.

NLU will take in a user query such as “Please transfer 500 $ to Mike” and return structured data like:

    {
      "intent": "fund_transfer",
      "entities": {
        "amount": "500",
        "beneficiary": "Mike"
      }
    }
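As a rough illustration of how downstream code might consume a result like the one above, here is a minimal Python sketch. It assumes the simplified shape shown in this article; a real Rasa response carries more fields (confidence scores, for example), and the `summarize` helper is hypothetical.

```python
import json

# Simplified NLU result, shaped like the example in this article.
# Assumption: a real Rasa response contains additional fields.
raw_result = (
    '{"intent": "fund_transfer", '
    '"entities": {"amount": "500", "beneficiary": "Mike"}}'
)


def summarize(result_json: str) -> str:
    """Turn the structured NLU result into a one-line summary (hypothetical helper)."""
    result = json.loads(result_json)
    intent = result["intent"]
    entities = result.get("entities", {})
    # Sort entity names so the summary is stable regardless of key order.
    details = ", ".join(f"{name}={value}" for name, value in sorted(entities.items()))
    return f"{intent}({details})"


print(summarize(raw_result))
```

The point is simply that once the message is reduced to an intent and a set of entities, the rest of the bot can work with plain data instead of free text.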

The biggest pain point for any problem we choose to solve with ML/AI is data, and NLU problems are no different. Building NLU models is tough, and building ones that are production-grade is even tougher. Below are some useful tips for designing your Rasa NLU training data to get the most out of your chatbot.

Conversation-Driven Development for NLU

Conversation-Driven Development (CDD) means letting real-world user conversations guide your bot building process. This process helps your bot understand things like common slang, synonyms and abbreviations that are hard for a bot developer to remember and incorporate into training data.

Capture Real Data

Because Rasa NLU needs a lot of training data to train well, bot builders often rely on text generation tools and programs to quickly increase the number of training examples. These tools are great for building bulk training data. While this approach can be efficient, it makes NLU models prone to overfitting to data that isn’t representative of what a real user would say. Variance in the data set is key for a well-trained NLU model.

To avoid this issue, it is a good idea to continuously collect as many real user conversations as possible to use as training data. Even though your bot will make mistakes at first, the process of training and evaluating on real user conversations will enable your model to generalize much more effectively in real-world situations.

Did you know?

Chatette is a Python program that generates training datasets for Rasa NLU from template files. If you want to build large datasets of example data for Natural Language Understanding tasks without too much of a headache, Chatette is a project for you. Specifically, Chatette implements a Domain Specific Language (DSL) that lets you define templates to generate a large number of sentences, which are then saved in the input format(s) of Rasa NLU.
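To make the idea of template-based generation concrete, here is a minimal sketch in plain Python. It is not Chatette's DSL; the word lists and the `generate_examples` function are hypothetical and only show how a handful of template slots multiply into many annotated sentences.

```python
from itertools import product

# Hypothetical template pieces; this is plain Python, not Chatette's DSL.
OPENERS = ["please", "can you", "i want to"]
VERBS = ["transfer", "send"]
AMOUNTS = ["500", "1200"]
NAMES = ["Mike", "Sara"]


def generate_examples():
    """Expand every combination of slots into an annotated training example."""
    examples = []
    for opener, verb, amount, name in product(OPENERS, VERBS, AMOUNTS, NAMES):
        # Entities are annotated in the [value](entity) style used in this article.
        examples.append(
            f"- {opener} {verb} [{amount}](amount) to [{name}](beneficiary)"
        )
    return examples


examples = generate_examples()
print(len(examples))
print(examples[0])
```

Note that every generated sentence shares the same skeleton. That is exactly the lack of variance described above, which is why generated data works best as a supplement to real conversations.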

Share with a Close Group of Users Early

In order to gather real training data, you will need real user messages. A bot developer can only come up with a limited range of examples, and users will always surprise you with what they say. This means you should share your bot with test users outside the development team as early as possible. See the full CDD guidelines for more details.

Avoiding Intent Mismatch

Intents are classified using character and word-level features extracted from your training examples, depending on which featurizers you’ve added to your NLU pipeline. When different intents contain the same words ordered in a similar way, this can create confusion for the intent classifier.

Making smart use of Entities

Intent mismatch or confusion often occurs when you want your assistant’s response to be conditioned on information provided by the user, because the user query then matches more than one intent with high confidence. For example, “How do I block my debit card?” versus “I want to block my credit card.”

Since each of these user messages will lead to a different response from the bot, your initial approach might be to create a separate Rasa intent for each card type, e.g. credit_card_block and debit_card_block.

However, these intents are trying to achieve the same goal (blocking the card) and will likely be phrased similarly, which may cause the model to confuse these intents.

To avoid intent mismatch, group these training examples into a single block_card intent and make the response depend on the value of a categorical card_type slot that is filled from an entity.

This also makes it easy to handle the case when no entity is provided, e.g. “How do I block my card?” For example:

    stories:
    - story: block my debit card
      steps:
      - intent: block_card
        entities:
        - card_type
      - slot_was_set:
        - card_type: debit
      - action: utter_debit_card_block

    - story: block my credit card
      steps:
      - intent: block_card
        entities:
        - card_type
      - slot_was_set:
        - card_type: credit
      - action: utter_credit_card_block

    - story: block my card
      steps:
      - intent: block_card
      - action: utter_ask_card_type
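The branching that these three stories describe can be sketched in a few lines of Python. This is only an illustration of the logic, not how Rasa works internally (Rasa learns the behaviour from the stories); the `next_action` function is hypothetical, while the response names come from the stories above.

```python
from typing import Optional

# Response names taken from the stories above; the function itself is a
# hypothetical illustration, not part of Rasa.
RESPONSES = {
    "debit": "utter_debit_card_block",
    "credit": "utter_credit_card_block",
}


def next_action(intent: str, card_type: Optional[str]) -> str:
    """Pick the bot response for block_card based on the card_type slot."""
    if intent != "block_card":
        raise ValueError(f"unsupported intent: {intent}")
    # With no recognized card type, ask the user to clarify.
    return RESPONSES.get(card_type, "utter_ask_card_type")


print(next_action("block_card", "debit"))
print(next_action("block_card", None))
```

One intent plus one slot keeps the classifier's job simple: it only has to recognize "block a card", and the card type is handled as data.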

Extracting entities

Common entities such as names, addresses, and cities require a large amount of training data for an NLU model to generalize effectively.

Rasa provides many options for entity extraction, and it can be difficult to know which method to use when.

Let’s drill down on a few of them.

The Rasa framework provides two great options for pre-trained extraction models: the DucklingEntityExtractor and the SpacyEntityExtractor.

Because these extraction models have been pre-trained on a very large corpus of data, you can use them to extract the entities they support without needing to annotate them in your training data.

Apart from these pre-trained extractors, Rasa provides other tools for extracting entities.

First up, Regexes!

A RegEx, or Regular Expression, is a sequence of characters that forms a search pattern (but I assume you already know that).

Rasa lets you define regexes for any entity.

Regexes are useful for performing entity extraction on structured patterns such as 11-digit bank account numbers.

Regex patterns can be used to generate features for the NLU model to learn, or as a method of direct entity matching.

To set this up, follow the example below.

The data in your nlu.md file looks like this:

    ## intent:inform
    - [AB-123](customer_id)
    - [BB-321](customer_id)
    - [12345](transaction_id)
    - [23232](transaction_id)

    ## regex:customer_id
    - \b[A-Z]{2}-\d{3}\b

    ## regex:account_number
    - \b\d{11}\b
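Before adding patterns like these to your training data, it can help to check them outside Rasa. The sketch below uses Python's standard `re` module with the two patterns above; the `extract` function is a hypothetical helper that mimics direct entity matching, not Rasa's own extractor.

```python
import re

# Patterns copied from the regex sections above.
CUSTOMER_ID = re.compile(r"\b[A-Z]{2}-\d{3}\b")
ACCOUNT_NUMBER = re.compile(r"\b\d{11}\b")


def extract(text: str) -> dict:
    """Return direct regex matches per entity name (a sketch, not Rasa's extractor)."""
    return {
        "customer_id": CUSTOMER_ID.findall(text),
        "account_number": ACCOUNT_NUMBER.findall(text),
    }


# An 11-digit number matches; a 12-digit number does not, thanks to \b.
print(extract("My id is AB-123 and my account is 12345678901"))
print(extract("Account 123456789012 is too long"))
```

The word boundaries (`\b`) matter here: without them, the account pattern would happily match the first 11 digits of a longer number.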

And the config file:

    language: "en"

    pipeline:
      - name: "SpacyNLP"
      - name: "SpacyTokenizer"
      - name: "SpacyFeaturizer"
      - name: "RegexFeaturizer"
      - name: "CRFEntityExtractor"
      - name: "EntitySynonymMapper"
      - name: "SklearnIntent

Second, Lookup Tables!

You can think of lookup tables as databases of information that you can set against any entity for matching. Lookup tables are processed as a regex pattern that checks whether any of the lookup table entries exist in the training example. Similar to regexes, lookup tables can be used to provide features to the model to improve entity recognition, or to perform match-based entity recognition. Examples of where you can use lookup tables include Apple iPhone models, à la carte menu options or ice cream flavours.
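The "processed as a regex pattern" part can be sketched as follows. This is a simplified illustration of the idea, not Rasa's actual implementation; the flavour list and the `build_lookup_pattern` function are hypothetical.

```python
import re

# Hypothetical lookup table entries for an ice cream flavour entity.
FLAVOURS = ["vanilla", "chocolate", "chocolate chip", "strawberry"]


def build_lookup_pattern(entries):
    """Compile lookup entries into one case-insensitive alternation."""
    # Longest entries first, so "chocolate chip" is tried before its
    # prefix "chocolate".
    ordered = sorted(entries, key=len, reverse=True)
    alternation = "|".join(re.escape(entry) for entry in ordered)
    return re.compile(rf"\b(?:{alternation})\b", re.IGNORECASE)


pattern = build_lookup_pattern(FLAVOURS)
print(pattern.findall("One Chocolate Chip and one vanilla, please"))
```

Because matching is purely string-based, a lookup table only helps when its entries are spelled the way users actually type them.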

Lastly, Synonyms!

Adding synonyms to your Rasa NLU training data is helpful for mapping different words in a user query to a single normalized entity value. Synonyms, however, are not meant to improve your model’s entity recognition and have no effect on NLU performance.

A good use case for synonyms is normalizing entities that belong to distinct groups. For example, when a virtual assistant asks users what type of loan they’re interested in, they might respond with “house loan,” “I need home loan,” or “housing.” It would be a good idea to map house, home, and housing to the normalized value house so that the processing logic only needs to account for a narrow set of possibilities.
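Conceptually, synonym mapping is a dictionary lookup applied after an entity has been extracted. The sketch below illustrates that with the loan example; the `normalize_entity` function is a hypothetical stand-in, not Rasa's synonym mapper.

```python
# Synonym table from the loan example above: each variant maps to "house".
SYNONYMS = {
    "home": "house",
    "housing": "house",
}


def normalize_entity(value: str) -> str:
    """Map an extracted entity value to its normalized form, if one is defined."""
    key = value.strip().lower()
    # Values without a synonym entry pass through unchanged (lowercased here).
    return SYNONYMS.get(key, key)


for extracted in ["house", "Home", "housing", "car"]:
    print(extracted, "->", normalize_entity(extracted))
```

This also shows why synonyms do not improve recognition: the mapping only runs on values that were already extracted.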

Take care of Misspellings!!

Coming across misspellings is inevitable, so your bot needs an effective way to handle them.

Keep in mind that the goal is not to correct misspellings, but to correctly identify intents and entities.

For this reason, while a spellchecker may seem like an obvious solution, adjusting your featurizers and training data is often sufficient to account for misspellings.

Adding a character-level featurizer provides an effective defense against spelling errors by accounting for parts of words, instead of only whole words.

You can add character-level featurization to your pipeline by using the char_wb analyzer for the CountVectorsFeaturizer.

In addition to character-level featurization, you can add common misspellings to your training data.
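To see why character-level features are robust to typos, consider the sketch below. It is a simplified, standard-library approximation of word-bounded character n-grams, not the featurizer's actual code; the function names are hypothetical. It compares how many trigrams a misspelled word shares with the correct one.

```python
def char_wb_ngrams(text: str, n: int = 3) -> set:
    """Character n-grams taken inside word boundaries, with each word space-padded."""
    grams = set()
    for word in text.lower().split():
        padded = f" {word} "
        for i in range(len(padded) - n + 1):
            grams.add(padded[i:i + n])
    return grams


def overlap(a: str, b: str) -> float:
    """Jaccard similarity between the n-gram sets of two strings."""
    grams_a, grams_b = char_wb_ngrams(a), char_wb_ngrams(b)
    return len(grams_a & grams_b) / len(grams_a | grams_b)


# A misspelling still shares many n-grams with the intended word,
# while an unrelated word shares none.
print(overlap("transfer", "tranfer"))
print(overlap("transfer", "balance"))
```

At the word level, "transfer" and "tranfer" are simply two different tokens with nothing in common. At the character level they still share much of their representation, which is what lets the classifier recover the intent.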

Conclusion

Apart from the above points, if there is any downside to Rasa’s feature-rich framework, it might be the steep learning curve around how these features work together. As some other chatbot developers have noted, Rasa isn’t necessarily for beginners. Unless you’ve built chatbots before with other chatbot development frameworks, Rasa might strike you as a bit intimidating at first.

Having said that, there are some great tutorials and material available for beginners to start working with this fabulous framework.

Most importantly, it has amazing community support: a diverse group of makers and conversational AI enthusiasts from different backgrounds and locations who help each other understand the framework and create better AI assistants.